Understanding DPDzero

DPDzero is a Collections-as-a-Service platform that enables scalable collections for banks, fintechs, NBFCs, and MFIs. We facilitate the recovery of debt across a wide range of loan products and delinquency stages, making it easier for financial institutions and their collection teams to achieve their goals.

The Challenge

One of our core functionalities is sending a large number of messages daily. As our business expanded, the volume of messages also increased significantly. Timely and effective communication is crucial for engaging customers, sending payment reminders, and ultimately driving successful debt recovery. Any delays, failures, or inconsistencies in message delivery can lead to missed payment reminders, reduced customer compliance, and increased delinquency rates, directly impacting revenue and cash flow.

In addition, inefficient messaging operations, such as breaching provider rate limits or failing to optimize vendor utilization, can lead to higher operational costs and lower profit margins. Inconsistent vendor volume commitments can also weaken our negotiating power, leading to less favorable commercial terms.

Therefore, optimizing the messaging system was essential not only for ensuring reliable communication but also for maximizing cost efficiency, maintaining profitability, and sustaining strong vendor relationships. We initially identified the following challenges:

1. Burst requests

Campaigns were run at various intervals daily, leading to frequent bursts in messaging requests. These bursts strained our system’s ability to process messages uniformly, risking delays and affecting engagement rates.

2. Rate-limits by the provider(s)

Another significant challenge was dealing with the rate limits imposed by messaging providers. Because of the frequent bursts, message processing was not uniform and the system breached rate limits very often, which delayed overall processing and led to poor utilisation.

Initially we tried to build a queuing mechanism within the main service itself, but because resources such as the cache, queue, and DB were shared with other functionalities, we ran into resource load issues. To mitigate this, we switched to another provider as soon as one provider returned a rate-limit error. However, due to pricing differences between vendors, our costs were higher than they needed to be. We had also committed volumes to some vendors in return for better commercials, but we could not meet those commitments because of the frequent redirections.

Also, for some services such as IVR, one vendor's pricing was fixed while the other's was volume-based, which caused frequent rate limits with the fixed-price vendor. So we wanted to optimise our costs by using the vendors' services effectively without hitting frequent rate limits.

3. Handling massive message volumes

The main challenge was to have our system handle a massive number of requests with minimal delay and reprocess them in case of failure. Most of the work in our application happens asynchronously, and scaling Celery was proving to be expensive and challenging. When a rate limit was hit, Celery would simply re-queue the messages for a different vendor. At times this overwhelmed the queue, and we ended up spawning many Celery worker instances just to process it.

4. Lack of granularity and separation of concerns

Since the functionality was tightly coupled with the main service, it was difficult to have fine-grained control over individual messages.

To address the challenges above, we decided to separate the vendor messaging functionality from the main service and build a microservice-based messaging system in Go. Robust concurrency support and scalability were the main reasons for opting for Go.

Initially we implemented a proxy service in Gofiber to handle all the messaging requests. To handle burst requests we used Amazon SQS (Simple Queue Service). We had to design a consumer to poll messages from the queue and process them. To meet the scalability requirements we had to process as many as 10k requests per minute, but SQS returns at most 10 messages per poll. To work around this limit we implemented workers using goroutines and designed our system to poll SQS and process messages concurrently.

To avoid hitting the providers' rate limits, we had to implement a self-limit mechanism in our system so that as many requests as possible are processed without breaching the limit. Initially we implemented the self-limit with a fixed window algorithm, using Redis as the caching service. Even though the self-limit was enforced, clock synchronisation issues with the providers meant we needed a more reliable algorithm. Instead of keeping the self-limit logic inside the service, we implemented a sliding window algorithm with millisecond-level precision as a Lua script, which gave us a robust self-limit.

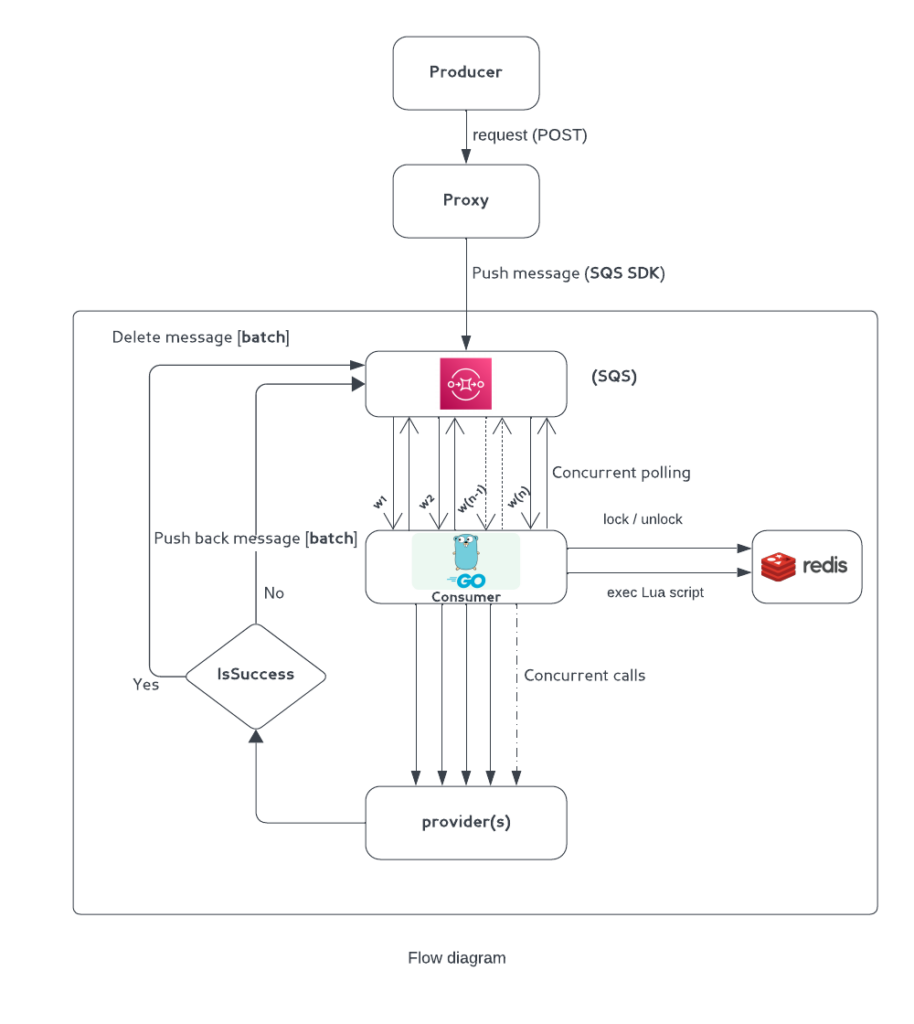

Flow diagram

- Proxy: A new proxy service that handles all incoming messaging requests and pushes them to Amazon SQS, along with support APIs.

- Producer: The main service, which calls the proxy to push messaging requests to SQS.

- Consumer:

  - The consumer was designed to process individual messages with granular control. Since SQS returns at most 10 messages per poll, we leveraged Go's robust concurrency and implemented multiple workers that poll SQS and process messages concurrently.

  - To avoid rate limits we needed to run a rate-limit check for each request at millisecond granularity. Since Lua works well with Redis and scripts execute atomically, we implemented the sliding window algorithm as a Lua script and cached it on the Redis server to enforce the self-limit and compute wait times efficiently.

With this in place, we have fine-grained control over messaging providers, better handling of burst requests, and better utilisation of vendor services. The new system has significantly enhanced our debt collection platform's scalability, cost efficiency, and operational agility. By ensuring timely and reliable communication, we improved borrower engagement and recovery rates, directly impacting revenue and reducing delinquency. Additionally, optimized vendor utilization and cost management contributed to better profit margins and strengthened vendor relationships.

- Increased Recovery Rates: Reliable and timely delivery of payment reminders improved customer compliance, leading to higher recovery rates and better cash flow.

- Cost Optimization: Efficient utilization of committed volumes minimized vendor switching, reducing messaging costs and enhancing profit margins.

- Enhanced Scalability and Efficiency: Leveraging Go’s concurrency allowed us to process high message volumes seamlessly, ensuring consistent communication and superior user experience.

- Operational Flexibility and Agility: The decoupled architecture provided granular control over message processing, allowing us to prioritize critical payment reminders and adapt messaging strategies in real time.